AWS Outage Analysis: How Amazon's 15-Hour Downtime Exposed Critical Infrastructure Vulnerabilities | October 2025

When 30% of the Internet Goes Dark: My Analysis of the AWS Catastrophe

Monday's AWS outage reminded me why I always tell my business clients: "Cloud doesn't mean bulletproof." When Amazon Web Services went down for 15 hours starting October 20, it took with it Signal, Snapchat, Fortnite, Robinhood, and over 1,000 other services that form the backbone of digital commerce.

From my experience managing cloud migrations, this wasn't just a technical failure — it was a wake-up call about the dangerous consolidation of internet infrastructure under a handful of providers.

The Technical Reality Behind the Chaos

The Root Cause: Amazon attributed the outage to "an underlying internal subsystem responsible for monitoring the health of our network load balancers." In other words, the system meant to watch for failures was itself where the failure surfaced. As someone who has implemented similar health-monitoring systems, the irony isn't lost on me.

The Cascade Effect: The failure originated in AWS's US-EAST-1 region (Northern Virginia), a region that a large share of AWS customers depend on, within a provider that holds roughly 30% of the cloud infrastructure market. As I've warned clients, this single point of failure represents a systemic risk that most enterprises badly underestimate.

The Financial Impact: With Downdetector logging over 11 million user reports and Tenscope estimating billions in lost revenue, this outage demonstrated why I recommend multi-cloud strategies for mission-critical applications.

What Happened to Critical Services

From my analysis of the affected platforms:

- Signal: The encrypted messaging app went completely dark, raising concerns about how much private communication, including that of journalists and activists, depends on a single cloud provider

- Snapchat: Over 13,000 users reported issues, highlighting social media's cloud vulnerability

- Financial Services: Robinhood and Coinbase users couldn't access trading platforms during active market hours

- Enterprise Tools: Slack, Zoom, and other business-critical applications failed simultaneously

The Government Impact: Even UK government services (GOV.UK) and banking systems across Europe were affected, showing that "critical infrastructure" extends far beyond national borders when cloud dependency is involved.

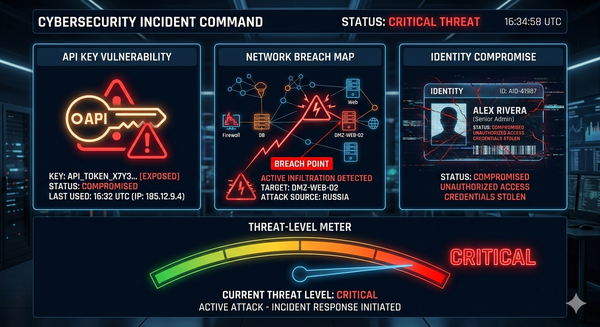

The Supply Chain Attacks I'm Tracking This Week

While everyone focused on AWS, cybercriminals stayed busy with sophisticated supply chain attacks that I believe represent the real long-term threat:

1. Adobe Commerce Under Active Exploit

The Vulnerability: CVE-2025-54236 (SessionReaper), a critical flaw (CVSS 9.1) that allows customer account takeover through the Commerce REST API.

The Reality: Sansec reported over 250 attack attempts in 24 hours, with 62% of Magento stores still vulnerable six weeks after disclosure. In my consulting work, I see this pattern repeatedly — organizations treat e-commerce platforms as "set and forget" infrastructure.

2. VS Code Extensions Compromised by "GlassWorm"

The Threat: GlassWorm is a self-propagating worm spreading through Visual Studio Code extensions on both the Open VSX Registry and Microsoft's Visual Studio Marketplace.

Why It Matters: Developers are the new high-value targets because they can access source code, deployment pipelines, and production systems. From my experience securing DevOps environments, I find this attack vector particularly concerning because it goes straight for the tools developers trust by default.

3. North Korean APT Targets Defense Contractors

Operation Dream Job Continues: ESET researchers documented attacks targeting European UAV manufacturers, which suggests the campaign is tied to North Korea's efforts to scale up its drone program.

The Sophistication: The attackers used malware families such as ScoringMathTea and MISTPEN to steal proprietary manufacturing knowledge, precisely the kind of intellectual property theft that can shift geopolitical power balances.

My Recommendations for Enterprise Resilience

Immediate Actions (Complete this week):

- Multi-Cloud Strategy: If you're running mission-critical applications on a single cloud provider, you're gambling with business continuity. Implement active-passive failover across providers such as AWS, Azure, and GCP, starting with the workloads you can least afford to lose.

- Dependency Mapping: Create comprehensive maps of your third-party service dependencies. The AWS outage showed how services you don't directly use can still bring down your applications.

- Developer Environment Security: In light of the VS Code extension compromise, implement code signing and integrity checking for all development tools and extensions.
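
To make the dependency-mapping point concrete, here is a minimal sketch of how a dependency map can reveal indirect exposure. Everything in it is an assumption for illustration: the service names, the `DEPENDENCIES` map, and the helper functions are hypothetical and not taken from any real architecture. It simply walks the graph to find every service that depends, directly or through a vendor, on a failed component.

```python
from collections import deque

# Hypothetical dependency map: each service lists what it calls directly.
# All names are illustrative only.
DEPENDENCIES = {
    "checkout": ["payments-api", "session-store"],
    "payments-api": ["fraud-vendor"],
    "fraud-vendor": ["aws:us-east-1"],
    "session-store": ["aws:us-east-1"],
    "status-page": ["static-cdn"],
    "static-cdn": [],
    "aws:us-east-1": [],
}


def transitive_dependencies(service, graph):
    """Return every direct and indirect dependency of `service`."""
    seen = set()
    queue = deque(graph.get(service, []))
    while queue:
        dep = queue.popleft()
        if dep in seen:
            continue  # already visited; also protects against cycles
        seen.add(dep)
        queue.extend(graph.get(dep, []))
    return seen


def impacted_by(failed, graph):
    """Return the services that depend, directly or not, on `failed`."""
    return sorted(
        name for name in graph
        if failed in transitive_dependencies(name, graph)
    )


if __name__ == "__main__":
    # "checkout" never calls AWS directly, yet it is still exposed.
    print(impacted_by("aws:us-east-1", DEPENDENCIES))
```

In this made-up map, `checkout` has no direct AWS dependency but still shows up as impacted, because both of its upstream services do. That is the pattern worth looking for in a real inventory.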

Strategic Implementations (30-90 days):

Based on my experience with incident response, organizations must shift from "cloud-first" to "resilience-first" thinking. This means:

- Edge Computing Deployment: Reduce dependency on centralized cloud regions

- Offline Capability Planning: Critical business functions must operate during cloud outages

- Supply Chain Security Program: Treat open-source dependencies and cloud services as potential attack vectors
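
One basic building block of a supply chain security program is refusing to install anything whose contents do not match a hash you pinned in advance. The sketch below shows that idea using only Python's standard library. The function names and the file-name-to-hash mapping are assumptions made for illustration; this is not how any particular marketplace or package manager verifies artifacts.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of(path):
    """Compute the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path, pinned_hashes):
    """Return True only if the file name is pinned and its hash matches.

    `pinned_hashes` is a hypothetical mapping of file name -> expected
    SHA-256 hex digest, maintained by your security team.
    """
    expected = pinned_hashes.get(Path(path).name)
    if expected is None:
        return False  # unknown artifacts are rejected, not trusted by default
    return hmac.compare_digest(sha256_of(path), expected.lower())
```

The design choice that matters here is the default: an artifact that is not on the pinned list fails the check. A pinned hash does not tell you the artifact is safe, only that it is the same artifact you reviewed.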

The Bigger Infrastructure Problem

What worries me most about this week's events is the pattern: AWS holds roughly 30% of the cloud infrastructure market, and Microsoft and Google hold much of the rest. This oligopoly creates systemic risks that individual companies can't solve alone.

The AWS outage lasted 15 hours. In my experience, that's catastrophic for any business that hasn't planned for extended cloud provider failures. Yet most organizations I consult with have recovery plans that assume outages lasting minutes, not hours.
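
To put 15 hours in perspective, here is a quick back-of-the-envelope calculation. It assumes a 365-day year and treats the outage as the only downtime in that year; the function names are mine, chosen for illustration.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours, ignoring leap years


def availability(downtime_hours, period_hours=HOURS_PER_YEAR):
    """Fraction of the period during which the service was up."""
    return 1 - downtime_hours / period_hours


def downtime_budget_hours(target, period_hours=HOURS_PER_YEAR):
    """Hours of downtime allowed per period at a given availability target."""
    return (1 - target) * period_hours


if __name__ == "__main__":
    # Annual availability if a single 15-hour outage is the only downtime.
    print(f"{availability(15):.4%}")
    # Yearly downtime budget, in minutes, for a 99.99% target.
    print(f"{downtime_budget_hours(0.9999) * 60:.1f} minutes")
```

A single 15-hour outage caps annual availability at about 99.83%, while a 99.99% target allows only about 52.6 minutes of downtime per year. A recovery plan built around minutes has no answer for an event of this size.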

What I'm Telling My Clients

- Assume Extended Outages: Plan for 24+ hour cloud provider failures, not just brief interruptions.

- Diversify Critical Dependencies: No single vendor should control more than 60% of your infrastructure.

- Monitor Supply Chains: The Adobe Commerce and VS Code attacks show that your applications are only as secure as their least-secure dependency.

- Implement Zero-Trust for Developers: Developer tools are now primary attack vectors requiring the same security rigor as production systems.
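
The 60% guideline above is easy to check once you have an inventory. The sketch below is one rough way to do it, under stated assumptions: the `INVENTORY` mapping is hypothetical, and every workload counts equally, which is a simplification. In practice you would likely weight by spend, revenue, or criticality.

```python
from collections import Counter

# Hypothetical inventory: workload name -> vendor hosting it.
INVENTORY = {
    "web-frontend": "aws",
    "orders-db": "aws",
    "auth-service": "aws",
    "analytics": "aws",
    "backup-archive": "azure",
}


def vendor_shares(inventory):
    """Return each vendor's share of workloads as a fraction of the total."""
    counts = Counter(inventory.values())
    total = sum(counts.values())
    return {vendor: n / total for vendor, n in counts.items()}


def over_concentrated(inventory, limit=0.60):
    """Return vendors whose share of workloads exceeds `limit`."""
    shares = vendor_shares(inventory)
    return sorted(vendor for vendor, share in shares.items() if share > limit)


if __name__ == "__main__":
    print(vendor_shares(INVENTORY))
    print(over_concentrated(INVENTORY))
```

With four of five workloads on one vendor, this made-up inventory sits at 80% and fails the check.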

Bottom Line: This week proved that our digital infrastructure is more fragile and interdependent than most organizations realize. The companies that survive the next major outage will be those that planned for systemic failures, not just isolated incidents.

Reference Sources:

- TechRadar: "Massive Amazon outage takes down Venmo, Snapchat, Alexa, Reddit, and much of the internet"

- NBC News: "Major AWS outage takes down web services like Snapchat and Ring"

- TIME: "Major Global Outage Impacts Amazon and More: What to Know"

- The Hacker News: "Over 250 Magento Stores Hit Overnight as Hackers Exploit New Adobe Commerce Flaw"

- BleepingComputer: "Hackers are actively exploiting the critical SessionReaper vulnerability"

- SecurityWeek: "Cybersecurity News, Insights and Analysis"