The AI Memory Crisis Is Here: What the Global Wafer Shortage Means for Your IT Budget and Infrastructure Strategy

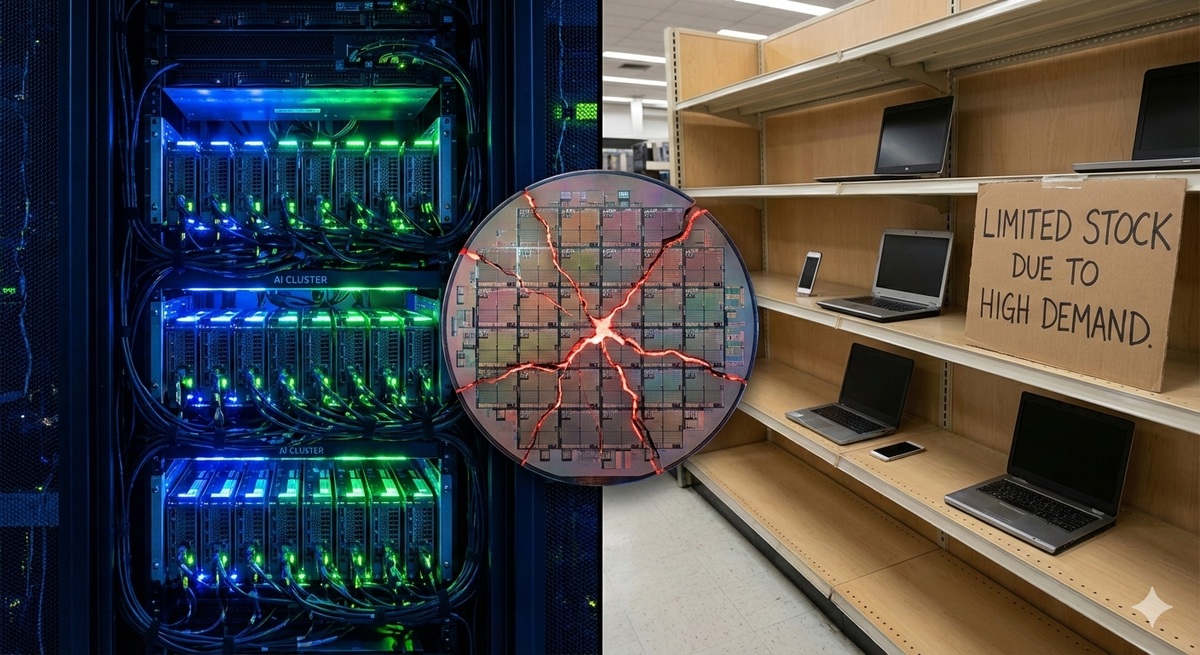

Every IT budget you planned for 2026 may already be wrong. The artificial intelligence boom that has dominated headlines for three years has quietly created a structural crisis in the global memory supply chain, and this week it became impossible to ignore. Samsung announced it has shipped the industry's first commercial HBM4 memory, Bloomberg reported that the AI-driven memory shortage is now a full-scale chip crisis, and IDC warned that PC shipments could contract by as much as 9% this year as DRAM prices surge past anything the industry has seen in a quarter-century. If you manage technology infrastructure, procure hardware, or set IT strategy, this is the week the math changed.

Samsung's HBM4: A Technological Milestone That Deepens the Problem

On February 13, Samsung Electronics announced it had begun mass production and customer shipments of HBM4, the fourth generation of high-bandwidth memory, marking an industry first. The specifications are impressive: a sustained transfer speed of 11.7 Gbps per pin (46% above the industry standard), bandwidth of up to 3.3 terabytes per second per stack in a 12-layer configuration, and a 40% improvement in power efficiency over previous generations. Samsung achieved this by adopting its most advanced 6th-generation 10nm-class DRAM process alongside a 4nm logic die, moving straight to its newest nodes rather than iterating on existing designs.
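The speed and bandwidth figures above can be cross-checked with simple arithmetic. The sketch below assumes a 2048-bit interface per stack and an 8 Gbps per-pin baseline, both taken from the JEDEC HBM4 standard rather than from this article, so treat the results as a sanity check and not as vendor data.

```python
# Back-of-envelope check of the HBM4 figures quoted above.
# ASSUMPTIONS (not stated in the article): a 2048-bit interface per stack
# and an 8 Gbps per-pin baseline, both taken from the JEDEC HBM4 standard.
INTERFACE_WIDTH_BITS = 2048
BASELINE_PIN_SPEED_GBPS = 8.0


def stack_bandwidth_tbps(pin_speed_gbps: float) -> float:
    """Peak per-stack bandwidth in terabytes per second."""
    gigabytes_per_second = INTERFACE_WIDTH_BITS * pin_speed_gbps / 8  # bits -> bytes
    return gigabytes_per_second / 1000


def pin_speed_for_bandwidth(bandwidth_tbps: float) -> float:
    """Per-pin speed in Gbps needed to reach a given per-stack bandwidth."""
    return bandwidth_tbps * 1000 * 8 / INTERFACE_WIDTH_BITS


uplift = 11.7 / BASELINE_PIN_SPEED_GBPS - 1
print(f"Uplift over baseline: {uplift:.0%}")
print(f"Bandwidth at 11.7 Gbps: {stack_bandwidth_tbps(11.7):.2f} TB/s")
print(f"Pin speed implied by 3.3 TB/s: {pin_speed_for_bandwidth(3.3):.1f} Gbps")
```

Under these assumptions, 11.7 Gbps works out to roughly 3.0 TB/s per stack, and 3.3 TB/s would need a pin speed near 12.9 Gbps. That suggests the 3.3 TB/s figure describes a peak configuration, not the sustained rate.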

Most of Samsung's HBM4 output will be installed in NVIDIA's next-generation Vera Rubin AI accelerator platform, expected in the second half of 2026. AMD has also listed HBM4 for its Instinct MI430X. Samsung projects its HBM sales will more than triple in 2026 compared to 2025, with HBM4E sampling planned for the second half of this year and custom HBM samples reaching customers in 2027.

Why a Better Chip Makes Things Worse for Everyone Else

Here is the uncomfortable reality that the HBM4 milestone illustrates. Every wafer allocated to an HBM stack destined for an NVIDIA GPU is a wafer denied to the LPDDR5X module in a mid-range smartphone, the DDR5 DIMM in a corporate workstation, or the DRAM cache in a storage array. HBM is extraordinarily resource-intensive: one gigabyte of HBM consumes roughly four times the wafer capacity of one gigabyte of standard DRAM, while GDDR7 requires 1.7 times as much. As Samsung, SK Hynix, and Micron deliberately shift production toward high-margin AI memory products, the supply of conventional memory is being structurally reduced.

TrendForce now projects that AI workloads will consume approximately 20% of global DRAM wafer capacity in 2026, driven by demand for HBM and GDDR7. This is not a temporary allocation spike. It is a permanent rebalancing of the world's semiconductor manufacturing priorities.
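To see why shifting wafers toward AI memory shrinks total output, it may help to work through the capacity ratios quoted above. This is a minimal sketch: the 4x and 1.7x ratios and the roughly 20% AI share come from the text, while the split of that share between HBM and GDDR7 is a hypothetical chosen for illustration.

```python
# Relative wafer capacity consumed per gigabyte, from the ratios quoted above.
CAPACITY_PER_GB = {"conventional": 1.0, "gddr7": 1.7, "hbm": 4.0}


def gigabytes_per_wafer_unit(product: str) -> float:
    """Gigabytes produced per unit of wafer capacity, relative to conventional DRAM."""
    return 1.0 / CAPACITY_PER_GB[product]


def total_output(wafer_share: dict[str, float]) -> float:
    """Total gigabyte output relative to an all-conventional baseline of 1.0."""
    if abs(sum(wafer_share.values()) - 1.0) > 1e-9:
        raise ValueError("wafer shares must sum to 1.0")
    return sum(
        share * gigabytes_per_wafer_unit(product)
        for product, share in wafer_share.items()
    )


# HYPOTHETICAL split of the ~20% AI share between HBM and GDDR7.
mix = {"conventional": 0.80, "hbm": 0.15, "gddr7": 0.05}
print(f"Conventional DRAM output: {mix['conventional']:.0%} of baseline")
print(f"Total gigabytes shipped:  {total_output(mix):.1%} of baseline")
```

With this illustrative split, conventional output falls to 80% of the baseline and total gigabytes shipped fall to about 87%, because each wafer moved to HBM or GDDR7 yields fewer gigabytes than it did as standard DRAM.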

The Numbers: A Price Shock Unlike Any in Recent Memory

The downstream effects are already measurable and accelerating. TrendForce's February 2026 analysis revised conventional DRAM contract price projections sharply upward: quarter-over-quarter increases of 90–95% for Q1 2026, well above the firm's initial forecast of 55–60%. PC DRAM specifically is projected to increase by over 100% quarter-over-quarter, which would be the largest quarterly price surge on record for the category. NAND Flash contract prices are expected to rise 55–60% in the same period.

These are not incremental cost adjustments. For IT procurement teams, they represent a fundamental repricing of every hardware category that contains memory, which is to say, virtually everything.
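How much a memory price increase moves the price of a finished system depends on how much of that system's cost is memory. The sketch below assumes the contract price increase is passed through in full and that nothing else in the bill of materials changes; the memory cost shares are hypothetical placeholders, not figures from the sources.

```python
def system_cost_increase(memory_share: float, memory_price_increase: float) -> float:
    """Fractional rise in system cost when only the memory portion reprices.

    memory_share: fraction of the system's cost attributable to memory (0..1).
    memory_price_increase: fractional rise in memory price (0.90 means +90%).
    """
    if not 0.0 <= memory_share <= 1.0:
        raise ValueError("memory_share must be between 0 and 1")
    return memory_share * memory_price_increase


# HYPOTHETICAL memory cost shares; replace with figures from your own quotes.
scenarios = {"office laptop": 0.10, "workstation": 0.20, "memory-heavy server": 0.35}
for name, share in scenarios.items():
    low = system_cost_increase(share, 0.90)   # low end of the Q1 2026 DRAM range
    high = system_cost_increase(share, 0.95)  # high end of the Q1 2026 DRAM range
    print(f"{name:<20} +{low:.1%} to +{high:.1%}")
```

Swapping in the memory share from an actual vendor quote gives a first-order estimate of how much of a price increase memory alone could explain.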

What This Means for Enterprise Hardware

The practical consequences are already surfacing across the supply chain. Intel and AMD have warned customers of delays of up to six months for server CPUs, as packaging capacity and memory availability constrain production. IDC reports that retail PC prices could rise by 20% or more in 2026. Major OEMs, including Dell, Lenovo, and HP, have signaled price increases of 15–20% in early 2026. IDC's pessimistic scenario projects global PC shipments could shrink by up to 9% this year — revised from a 2.5% contraction forecast issued just three months ago.

The smartphone market faces parallel pressure. IDC projects average selling prices could rise by as much as 8% even as the global smartphone market contracts by up to 5.2% in a downside scenario. Xiaomi's CFO publicly warned that memory cost pressures will drive up device prices, with analyst projections showing the company budgeting for a roughly 25% increase in DRAM expense per handset.

SMIC's Warning: The Overcapacity Paradox

Against this backdrop of shortage and price escalation, a counterpoint emerged from China's largest chipmaker. SMIC co-CEO Zhao Haijun warned that the breakneck pace of AI infrastructure buildout risks pulling years of future demand forward, cautioning that companies are trying to build a decade's worth of data center capacity within one or two years. The implication: today's shortage could eventually give way to overcapacity if AI demand growth decelerates or if the current infrastructure buildout overshoots actual workload requirements.

This creates a strategic dilemma for IT leaders. The shortage is real and present, but the possibility of a correction means that locking into long-term premium-priced procurement contracts carries its own risk. Organizations need to balance urgency against the potential for market normalization — a balance that requires active monitoring rather than reactive purchasing.
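One way to frame the dilemma is as an expected-value comparison between buying now and deferring a purchase by one period. The sketch below is deliberately simple: it ignores carrying costs, discounting, and allocation risk, and every probability and price change in it is a hypothetical input, not a forecast.

```python
def expected_wait_cost(price_now: float, p_rise: float, rise: float, fall: float) -> float:
    """Expected unit price if the purchase is deferred one period.

    p_rise: probability that prices keep rising (the remainder is a correction).
    rise / fall: fractional price change in each outcome, both given as positives.
    """
    if not 0.0 <= p_rise <= 1.0:
        raise ValueError("p_rise must be between 0 and 1")
    return price_now * (p_rise * (1 + rise) + (1 - p_rise) * (1 - fall))


def breakeven_p_rise(rise: float, fall: float) -> float:
    """Probability of a rise at which waiting and buying now cost the same."""
    if rise + fall <= 0:
        raise ValueError("rise + fall must be positive")
    return fall / (rise + fall)


# HYPOTHETICAL scenario: +30% if the shortage persists, -20% if the market corrects.
price_now, rise, fall = 1_000.0, 0.30, 0.20
for p_rise in (0.3, 0.5, 0.8):
    wait = expected_wait_cost(price_now, p_rise, rise, fall)
    verdict = "buy now" if wait > price_now else "waiting is no worse"
    print(f"P(rise)={p_rise:.0%}: expected deferred price ${wait:,.0f} -> {verdict}")
print(f"Break-even P(rise): {breakeven_p_rise(rise, fall):.0%}")
```

The break-even probability is the useful output here: if your own estimate of continued price increases sits above it, early procurement looks better on expected cost, and if it sits below, waiting does.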

Applied Materials and the Equipment Layer

The semiconductor equipment sector provided further confirmation of the structural shift this week. Applied Materials reported a fiscal Q1 2026 earnings beat that sent its stock up over 11% in a single session, driven by demand for tools supporting Gate-All-Around transistor manufacturing at 2nm nodes and through-silicon via (TSV) processing for advanced memory stacking. The equipment intensity of HBM production, which requires 3–4 times the wafer-start tooling of standard memory, underscores why manufacturing capacity cannot be quickly reallocated or expanded. The bottleneck is not just wafers; it is the machines that process them.

A Practical Framework for IT Leaders

The AI memory crisis is not a problem you can solve, but it is one you can manage. Here is a five-step approach:

- Audit your hardware refresh timeline now. If you have server, workstation, or endpoint refresh cycles planned for Q2 or Q3 2026, evaluate whether accelerating procurement into Q1 — before further price increases — is financially advantageous. The cost of carrying inventory may be less than the cost of waiting.

- Diversify your memory sourcing. Several PC and device manufacturers are evaluating memory from Chinese manufacturers such as ChangXin Memory Technologies (CXMT) as alternatives to Samsung, SK Hynix, and Micron. Work with your OEM partners and distribution channels to understand what alternative sourcing options exist for your configurations without compromising reliability or warranty coverage.

- Reassess cloud vs. on-premises economics. The calculus between owning hardware and consuming it as a service shifts when hardware costs spike. Cloud providers absorb memory price increases across massive procurement volumes and amortize them over multi-year infrastructure lifecycles. For workloads with elastic demand, this may be the moment when cloud migration becomes financially compelling, even if it wasn't previously.

- Model the downstream budget impact. Memory is a component cost, but it propagates through every hardware line item. Servers, storage arrays, networking equipment with onboard memory, and endpoint devices — all will be affected. Build a sensitivity analysis that models 20%, 40%, and 60% increases in memory-dependent hardware costs against your 2026 and 2027 capital budgets.

- Monitor the market weekly, not quarterly. This is a fast-moving situation. TrendForce, IDC, and Bloomberg are publishing updated projections on a near-weekly basis. Assign someone on your procurement or infrastructure team to track contract pricing, OEM announcements, and allocation changes so you can make informed decisions rather than reacting to sticker shock at the point of purchase.
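The sensitivity analysis described in the fourth step can be sketched in a few lines. The 20%, 40%, and 60% scenarios come from the text; the budget lines, dollar amounts, and the `memory_exposed` fraction are hypothetical placeholders to be replaced with your own capital plan.

```python
from dataclasses import dataclass


@dataclass
class BudgetLine:
    name: str
    planned_spend: float   # planned capital spend for the year, in dollars
    memory_exposed: float  # fraction of the line that is memory-dependent hardware


def sensitivity(lines: list[BudgetLine], increases: tuple[float, ...]) -> dict[float, float]:
    """Map each price-increase scenario to the total overrun in dollars."""
    return {
        inc: sum(line.planned_spend * line.memory_exposed * inc for line in lines)
        for inc in increases
    }


# HYPOTHETICAL budget figures, for illustration only.
budgets = {
    2026: [
        BudgetLine("servers", 1_200_000, 1.0),
        BudgetLine("endpoints", 800_000, 1.0),
        BudgetLine("software licenses", 500_000, 0.0),
    ],
    2027: [
        BudgetLine("storage arrays", 900_000, 1.0),
        BudgetLine("networking", 400_000, 0.5),
    ],
}

for year, lines in budgets.items():
    total = sum(line.planned_spend for line in lines)
    for inc, overrun in sensitivity(lines, (0.20, 0.40, 0.60)).items():
        print(f"{year} +{inc:.0%}: ${overrun:,.0f} over plan ({overrun / total:.1%} of budget)")
```

Keeping the exposure fraction per line makes it possible to separate fully exposed categories such as servers from partially exposed ones such as networking equipment, so the overrun is not overstated by applying the increase to the whole budget.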

Common Mistakes to Avoid

- Assuming this is a repeat of 2020–2022. The pandemic-era chip shortage was driven by supply chain disruption and demand surges that eventually normalized. This shortage is structural — manufacturers are choosing to produce less consumer and enterprise memory because AI memory is more profitable. The resolution mechanism is different, and the timeline is longer.

- Delaying procurement in the hope of price relief. The major forecasts cited here project continued price escalation through at least mid-2026. Waiting is a strategy, but it is currently a losing one.

- Ignoring the endpoint refresh. Organizations focused on server and data center infrastructure may overlook the impact on PCs, laptops, and mobile devices. With potential 20% price increases on endpoints, a 5,000-device refresh at an illustrative $1,200 per device would run about $1.2 million over budget.

- Treating this as purely a procurement problem. The memory crisis intersects with architectural decisions. Workload placement, storage tiering, memory optimization in application design, and infrastructure right-sizing all become levers when memory is scarce and expensive.

The Bigger Picture

The AI memory crisis is a preview of a broader tension that will define enterprise technology for the next several years: AI infrastructure demand is reshaping the global semiconductor supply chain in ways that impose real costs on every other technology consumer. The companies building AI platforms are willing to pay premium prices for scarce manufacturing capacity, and the rest of the market — from healthcare IT to retail point-of-sale to corporate endpoint fleets — absorbs the consequences.

For IT leaders, the strategic response is not to resist this shift but to adapt to it: procure proactively, architect efficiently, and maintain the market intelligence needed to make informed timing decisions. If your organization needs help modeling budget impact, evaluating cloud migration economics, or building a procurement strategy that accounts for sustained component inflation, our team can help you navigate this landscape with a clear-eyed assessment of both risks and opportunities.

Sources

- Samsung Global Newsroom: Samsung Ships Industry-First Commercial HBM4

- Bloomberg: AI Boom Driving a Global Memory Chip Shortage, Sending Prices Soaring (February 15, 2026)

- Bloomberg: AI Industry Is Gobbling Up Memory Chips, Causing Critical Shortage (February 16, 2026)

- TrendForce: AI to Consume 20% of Global DRAM Wafer Capacity in 2026

- IDC: Global Memory Shortage Crisis — Impact on Smartphone and PC Markets in 2026

- Tom's Hardware: IDC Warns PC Market Could Shrink Up to 9% in 2026 Due to RAM Pricing

- Taipei Times: Samsung Starts Mass Production of Next-Gen AI Chips (February 13, 2026)

- Taipei Times: SMIC Says Rushed AI Chip Capacity Could End Up Idle (February 12, 2026)

- VideoCardz: Samsung Begins HBM4 Mass Production — Up to 3.3 TB/s Per Stack

- Rest of World: AI Is Dominating the World's Memory Chips

- SEMI: 2025 Annual Worldwide Silicon Wafer Shipments and Revenue Results

- Financial Content: The AI Giga-Cycle — Applied Materials 2026 Breakout (February 16, 2026)