The AI Trust Crisis: When Sponsored Content Meets Stealth Attacks

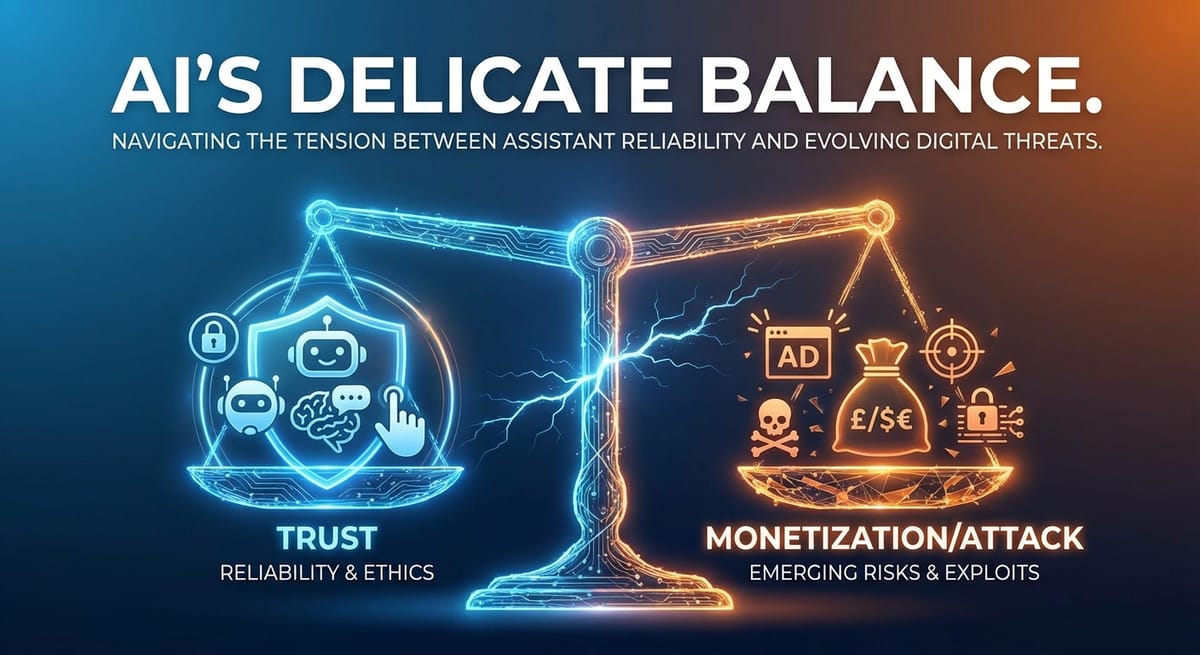

Last week, delivered low confidence in the enterprise's AI assistants. On one front, reports emerged that OpenAI is exploring ways to prioritize sponsored content within ChatGPT responses. On the other hand, security researchers continue to expose vulnerabilities in AI chatbots that allow attackers to hijack conversations and exfiltrate sensitive data. For IT leaders and security professionals, these developments threaten trust, integrity, and security, core elements that require immediate attention.

The message is clear: the AI tools your organization depends on may soon serve interests other than your users'—and attackers are already finding ways to exploit the gaps.

OpenAI's Advertising Ambitions: What We Know

According to reporting from The Information and BleepingComputer, OpenAI is actively advancing plans to introduce advertising into ChatGPT—and the approach goes far beyond simple banner ads.

Internal discussions reportedly include options to prioritize sponsored content directly within the AI-generated answers. Ad mockups have included sponsored information displayed in a sidebar alongside the main ChatGPT response window, with some proposals going further by embedding promotional content into conversational outputs.

OpenAI confirmed the exploration, stating that as ChatGPT becomes more widely used, the company is "looking at ways to continue offering more intelligence to everyone" and that any approach "would be designed to respect that trust."

However, the implications for enterprise users are significant.

Why This Matters for Enterprise AI Strategy

Data Depth Creates Targeting Precision: ChatGPT likely possesses more contextual information about individual users than traditional advertising platforms. Unlike search engines that see queries, conversational AI accumulates context across extended interactions—understanding projects, preferences, pain points, and decision-making processes. This creates unprecedented targeting potential.

Trust architecture at risk: Enterprise users increasingly rely on AI assistants for research, analysis, and recommendations. If responses are influenced by paid placements, the core value of AI-assisted decision-making diminishes. When your 'trusted advisor' has undisclosed financial incentives, it can undermine confidence and trust in your organization's AI tools.

Disclosure Ambiguity: Early evidence suggests the line between "recommendation" and "advertisement" may be intentionally blurred. When a ChatGPT Plus user received an unsolicited shopping recommendation for Target within an unrelated technical response, OpenAI characterized it not as an ad but as an "app recommendation" from a pilot partner, with efforts to make discovery "more organic." For most observers, the distinction is semantic—a branded call-to-action within paid software is functionally an advertisement.

The Parallel Threat: AI Chatbot Vulnerabilities Under Attack

While the advertising discussion focuses on intentional content manipulation, security researchers are documenting how attackers exploit technical weaknesses. Recognizing these vulnerabilities is crucial for maintaining confidence in your AI systems and protecting your organization's reputation.

This week's ThreatsDay Bulletin from The Hacker News catalogued multiple AI-related vulnerabilities that should concern every organization deploying conversational AI in production environments.

Docker's Ask Gordon Prompt Injection

Docker patched a significant prompt injection vulnerability in Ask Gordon, its AI assistant embedded in Docker Desktop and the CLI. The flaw, discovered by Pillar Security, allowed attackers to hijack the assistant by poisoning the metadata of Docker Hub repositories with malicious instructions.

The attack vector was elegant and concerning: an adversary could create a malicious Docker Hub repository containing crafted instructions that triggered automatic tool execution when unsuspecting developers asked the chatbot to describe the repository. The AI's inherent trust in Docker Hub content became its weakness.

"By exploiting Gordon's inherent trust in Docker Hub content, threat actors can embed instructions that trigger automatic tool execution—fetching additional payloads from attacker-controlled servers, all without user consent or awareness," noted security researcher Eilon Cohen.

The vulnerability was addressed in Docker Desktop version 4.50.0, but the pattern it exposed—AI assistants trusting external data sources that attackers can manipulate—remains endemic across the ecosystem.

Eurostar Chatbot: Guardrails Bypassed

Researchers demonstrate how vulnerabilities like prompt injection can be exploited; organizations must develop detection mechanisms, such as anomaly detection and response protocols, to identify and mitigate these attacks in real time.

Additional issues included the ability to modify message IDs (potentially enabling cross-user compromise) and the injection of HTML code due to insufficient input validation.

"An attacker could exfiltrate prompts, steer answers, and run scripts in the chat window," Pen Test Partners reported. "The core lesson is that old web and API weaknesses still apply even when an LLM is in the loop."

AI Agents: Autonomous Exploitation Becomes Reality

Perhaps most concerning, Anthropic's Frontier Red Team disclosed that AI agents—including Claude Opus 4.5, Claude Sonnet 4.5, and GPT-5—discovered independently $4.6 million in exploits in blockchain smart contracts. Both agents uncovered two novel zero-day vulnerabilities, demonstrating profitable, real-world autonomous exploitation as technically feasible.

This represents a paradigm shift. AI systems aren't just targets for exploitation—they're becoming capable exploiters themselves, raising fundamental questions about how we secure systems against adversaries that learn and adapt autonomously.

The Broader Threat Landscape: What Else Demands Attention

Beyond AI-specific concerns, this week's security developments underscore the expanding attack surface organizations must defend.

NFC-Abusing Android Malware Surges 87%

ESET data revealed dramatic growth in NFC-based Android malware between the first and second halves of 2025. Modern variants like PhantomCard combine NFC exploitation with remote access trojans and automated transfer capabilities, harvesting payment card data and PINs while disabling biometric verification.

Fake PoC Exploits Target Security Researchers

Threat actors are now weaponizing the security community's own workflows, distributing WebRAT malware through GitHub repositories that impersonate proof-of-concept exploits for vulnerabilities like CVE-2025-59295 and CVE-2025-10294. The repositories feature professional-looking documentation—likely machine-generated—to build credibility before delivering malicious payloads.

GuLoader Campaigns Peak

The commodity loader GuLoader (CloudEye) reached new distribution heights between September and November 2025, with multi-stage infection chains featuring heavy obfuscation designed to evade signature-based detection.

Russian Influence Operations Scale with AI

The CopyCop influence network (Storm-1516) is deploying more than 300 inauthentic websites disguised as local news outlets, using self-hosted uncensored LLMs to generate thousands of fake stories daily. The integration of AI into disinformation demonstrates how generative models amplify adversary capabilities across domains.

Strategic Recommendations: Navigating the AI Trust Paradox

Organizations face a complex calculus: AI tools deliver genuine productivity gains, but the integrity of their outputs is increasingly uncertain—whether from commercial incentives or adversarial manipulation. Here's how to adapt.

For AI Governance

Implement output validation workflows. Treat AI-generated content as a draft requiring human verification, particularly for decisions with financial, legal, or strategic implications. Cross-reference recommendations against independent sources before acting.

Establish disclosure requirements. Push vendors to be transparent about how monetization affects model behavior. Include specific contractual language prohibiting undisclosed paid placements in enterprise agreements.

Consider alternative architectures. On-premises or private cloud deployments of open-source models offer greater control over output integrity, though they require additional infrastructure and expertise investment.

Audit AI integrations regularly. Any system in which AI assistants interact with external data sources (such as Docker Hub, code repositories, or web content) represents a potential prompt-injection vector. Map these integration points and assess trust boundaries.

For Security Operations

Update threat models for AI attack surfaces. Traditional web and API vulnerabilities persist in AI-powered applications. Ensure penetration testing and security assessments explicitly include conversational interfaces.

Monitor for AI-specific IOCs. Watch for unusual patterns in AI assistant behavior—unexpected tool calls, data exfiltration attempts, or outputs inconsistent with user queries.

Protect developer workflows. Security researchers and developers are now explicit targets. Verify the provenance of any "proof-of-concept" code before execution, and treat GitHub repositories with suspicion even when they appear professional.

Prepare for autonomous threats. The demonstrated ability of AI agents to discover and exploit vulnerabilities independently suggests that static defenses will become less effective. Invest in behavioral detection and anomaly identification capabilities.

For Executive Leadership

Communicate the changing risk landscape. The tools marketed as productivity enhancers carry emerging risks that may not be apparent to non-technical stakeholders. Ensure leadership understands that "AI-assisted" doesn't mean "AI-verified."

Budget for AI security. Traditional security spending assumptions don't account for the additional attack surface introduced by AI integrations. Allocate resources specifically for AI governance, testing, and monitoring.

Establish ethical use policies. Define organizational standards for AI tool usage that account for both security risks and potential conflicts of interest from monetized outputs.

Common Mistakes to Avoid

Assuming AI output neutrality. Whether influenced by advertising or prompt injection, AI responses may serve interests other than those of users. Treat outputs with appropriate skepticism.

Treating AI chatbots as secure applications. The Eurostar and Docker vulnerabilities demonstrate that AI interfaces inherit traditional web and API security weaknesses. Apply the same security scrutiny you would to any customer-facing application.

Ignoring the supply chain. AI systems often consume external data—from web searches to repository metadata to API integrations. Each source represents a potential manipulation vector.

Delaying governance decisions. The advertising and security landscapes are evolving rapidly. Organizations that defer AI governance frameworks will find themselves adapting to vendor-imposed realities rather than shaping acceptable use terms.

Looking Ahead

The developments of the past week represent an inflection point. AI assistants are transitioning from tools that serve user interests exclusively to platforms with multiple stakeholders—including advertisers and, inevitably, attackers who exploit the complexity this creates.

For IT professionals, this isn't cause for abandoning AI initiatives but rather for implementing the governance, security, and verification frameworks that mature technology deployment requires. The organizations that navigate this transition successfully will be those that approach AI with the same rigor they apply to any business-critical system: trust, but verify.

Sources: BleepingComputer, The Information, The Hacker News ThreatsDay Bulletin, Pillar Security, ESET, Pen Test Partners, Anthropic Frontier Red Team